A self-driving car, also known as an autonomous car or driverless car, represents a significant leap in automotive technology. These vehicles use a combination of sensors, cameras, radar, and artificial intelligence (AI) to navigate roads and reach destinations without human intervention. To qualify as truly autonomous, a car must be able to drive to a preset destination on ordinary roads that have not been specially adapted for it.

The emergence of self-driving cars holds the potential to reshape transportation and infrastructure dramatically. Imagine reduced traffic congestion, fewer accidents, and the rise of innovative transportation services like self-driving ride-sharing and trucking.

Leading the charge in developing and rigorously testing autonomous vehicles are companies like Audi, BMW, Ford, Google, General Motors, Tesla, Volkswagen, and Volvo. Waymo, a self-driving project under Google’s parent company, Alphabet Inc., stands out with its extensive testing, deploying fleets of autonomous vehicles, including Toyota Prius and Audi TT models, across hundreds of thousands of miles of roads and highways.

The Core Programming of Driverless Cars

At the heart of every self-driving car lies a complex AI system, meticulously programmed to mimic and surpass human driving capabilities. Developers leverage vast datasets from image recognition systems, coupled with advanced machine learning and neural networks, to construct these autonomous driving systems.

Neural networks are crucial for identifying patterns within the immense data streams fed to machine learning algorithms. This data originates from a suite of sensors, including radar, lidar (light detection and ranging – a remote sensing technology for measuring distances), and cameras. These sensors continuously gather information that the neural network uses to learn and recognize critical elements of the driving environment – traffic signals, pedestrians, street signs, curbs, trees, and more.

An autonomous vehicle uses a network of sensors to perceive its surroundings, including other vehicles, pedestrians, curbs, and road signs. The vehicle’s software then constructs a detailed map of its environment, enabling it to understand its location relative to everything around it. This is followed by path planning, where the system calculates the safest and most efficient route to the destination, adhering to traffic laws and avoiding obstacles. Geofencing technology, which defines virtual boundaries for navigation, is also used to keep vehicles operating within specified areas.
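The path-planning step described above can be illustrated with a small sketch. It assumes the environment map has already been reduced to a 2D occupancy grid (0 for free, 1 for blocked) and uses A* search, a common textbook planner. The function name `plan_path` and the grid layout are hypothetical; production planners also account for vehicle dynamics and traffic rules, which this example ignores.

```python
import heapq

def plan_path(grid, start, goal):
    """A* search on a 4-connected occupancy grid (0 = free, 1 = blocked).

    Returns the list of (row, col) cells from start to goal, or None
    if the goal cannot be reached. Illustrative only.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance never overestimates on a 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(heuristic(start), 0, start)]
    came_from = {start: None}
    cost_so_far = {start: 0}

    while frontier:
        _, cost, current = heapq.heappop(frontier)
        if current == goal:
            # Walk the parent links back to the start.
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if cost > cost_so_far[current]:
            continue  # stale queue entry; a cheaper route was already found
        r, c = current
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc] == 1:
                continue
            new_cost = cost + 1
            if new_cost < cost_so_far.get(nxt, float("inf")):
                cost_so_far[nxt] = new_cost
                came_from[nxt] = current
                heapq.heappush(frontier, (new_cost + heuristic(nxt), new_cost, nxt))
    return None
```

Calling `plan_path(grid, (0, 0), (3, 3))` on a 4x4 grid returns the sequence of cells to traverse, or `None` when obstacles seal off the goal.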

Geofencing in these vehicles employs Global Positioning System (GPS) or similar location-based technologies to establish virtual perimeters around geographical zones. These geofences trigger automated responses or alerts when a vehicle crosses into or out of these defined areas. In automotive applications, geofencing is frequently used for managing vehicle fleets, tracking locations, and enhancing driver safety protocols.
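As a rough illustration of how a geofence can trigger responses, the sketch below checks a sequence of GPS fixes against a circular fence and reports each crossing. The circular shape, the function names, and the sample coordinates are assumptions made for this example; real deployments often use polygon boundaries instead.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def geofence_events(track, center, radius_m):
    """Return (index, 'enter' | 'exit') for each fix where the fence is crossed.

    track  -- list of (lat, lon) GPS fixes in time order
    center -- (lat, lon) of the hypothetical circular fence
    """
    events = []
    inside = None  # unknown until the first fix is processed
    for i, (lat, lon) in enumerate(track):
        now_inside = haversine_m(lat, lon, center[0], center[1]) <= radius_m
        if inside is not None and now_inside != inside:
            events.append((i, "enter" if now_inside else "exit"))
        inside = now_inside
    return events
```

Each returned event could drive an alert or an automated response, such as notifying a fleet operator when a vehicle leaves its service area.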

Waymo’s approach exemplifies this sensor fusion, combining radar, lidar, and cameras. By merging the data from these systems, the vehicle can identify the objects in its vicinity and even predict their future movements, all within fractions of a second. Experience is paramount: the more miles the system drives, the more data it accumulates, which is then fed into deep learning algorithms. This continuous learning process refines the system’s ability to make increasingly nuanced and sophisticated driving decisions.
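To make the idea of merging sensor data and predicting motion concrete, here is a minimal sketch. It is not Waymo's method: it assumes each sensor reports a distance together with a known noise variance, fuses the readings with inverse-variance weighting, and predicts motion with a constant-velocity model. The function names and all numeric values are illustrative.

```python
def fuse(measurements):
    """Combine independent measurements by inverse-variance weighting.

    measurements -- list of (value, variance) pairs, one per sensor
    Returns (fused_value, fused_variance). The less noisy sensor
    receives the larger weight.
    """
    weights = [1.0 / variance for _, variance in measurements]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    return value, 1.0 / total

def predict(position, velocity, dt):
    """Constant-velocity prediction of an object's position after dt seconds."""
    return tuple(p + v * dt for p, v in zip(position, velocity))

# Hypothetical readings: lidar says 20.0 m (low noise), radar says 20.6 m.
distance, variance = fuse([(20.0, 0.01), (20.6, 0.09)])
```

The fused estimate lands much closer to the lidar reading because its assumed variance is nine times smaller, and the fused variance is lower than that of either sensor alone.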

Here’s a simplified breakdown of how Waymo’s self-driving system operates:

- Destination Input: The driver or passenger inputs the desired destination, and the car’s software calculates the optimal route.

- Lidar Mapping: A rotating lidar sensor, positioned on the roof, scans a 60-meter radius around the vehicle, generating a dynamic 3D map of the immediate environment.

- Position Tracking: A sensor on the rear wheel monitors lateral movement, precisely determining the car’s position within the 3D map.

- Radar Obstacle Detection: Radar systems in the front and rear bumpers measure distances to potential obstacles.

- AI Sensor Integration: AI software connects to all sensors, integrating data from Google Street View and onboard video cameras.

- AI Decision-Making: Employing deep learning, the AI emulates human perception and decision-making to control driving functions like steering and braking.

- Map Integration: The system consults Google Maps for advance information on landmarks, traffic signs, and traffic lights.

- Human Override: An override function is always available, allowing a human to regain manual control of the vehicle.
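The last two steps, AI decision-making and human override, can be sketched as a simple arbitration rule. The example assumes a toy planner that brakes when an obstacle lies inside the stopping distance plus a margin, and it lets a human command take priority whenever one is present. The `Command` type, function names, and thresholds are hypothetical and are not taken from Waymo's software.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Command:
    source: str      # "human" or "autonomy"
    steering: float  # -1.0 (full left) to 1.0 (full right)
    throttle: float  # 0.0 to 1.0
    brake: float     # 0.0 to 1.0

def plan(speed_mps: float, obstacle_distance_m: float,
         max_decel: float = 6.0, margin_m: float = 5.0) -> Command:
    """Toy planner: brake if the obstacle is within stopping distance plus margin."""
    stopping_m = speed_mps ** 2 / (2 * max_decel)  # v^2 / (2a)
    if obstacle_distance_m <= stopping_m + margin_m:
        return Command("autonomy", 0.0, 0.0, 1.0)
    return Command("autonomy", 0.0, 0.2, 0.0)

def arbitrate(autonomy_cmd: Command, human_cmd: Optional[Command] = None) -> Command:
    """A human command, when present, always overrides the planner's output."""
    return human_cmd if human_cmd is not None else autonomy_cmd
```

Keeping the override check outside the planner means the human path does not depend on the AI stack working correctly, which is the point of an override.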

Cars with Self-Driving Features: Stepping Stones to Full Autonomy

The Waymo project showcases a near-fully autonomous vehicle, requiring a human driver primarily for emergency overrides. While highly autonomous, it’s not yet considered purely self-driving in all conditions. Current mass-produced vehicles are not fully autonomous because of technological hurdles, regulatory frameworks, and safety considerations. Tesla, often credited with pushing the boundaries of self-driving technology, offers advanced self-driving features but still faces ongoing challenges, including technological complexity, sensor limitations, and the difficulty of ensuring safety.

Many vehicles available to consumers today offer significant self-driving capabilities, albeit at lower levels of autonomy. These features are becoming increasingly common:

- Hands-free steering: Keeps the car centered in its lane without requiring the driver’s hands on the wheel, though driver attentiveness remains crucial.

- Adaptive Cruise Control (ACC): Automatically maintains a set distance from the vehicle ahead.

- Lane-centering steering: Corrects course if the vehicle drifts towards lane markings, gently steering it back.

- Self-parking: Utilizes sensors to autonomously steer, accelerate, and maneuver the car into parking spaces with minimal driver input.

- Highway Driving Assist: Combines ACC and lane-centering for highway driving.

- Lane-change assist: Monitors surrounding traffic to facilitate safe lane changes, either alerting the driver or automatically steering when safe.

- Lane Departure Warning (LDW): Alerts the driver if the vehicle begins to drift out of its lane without signaling.

- Summon: A Tesla feature that allows the vehicle to autonomously exit a parking space and drive to the driver’s location.

- Evasive-steering assist: Automatically steers to help the driver avoid imminent collisions.

- Automatic Emergency Braking (AEB): Detects likely collisions and applies brakes to prevent or mitigate accidents.
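Two of the features above, ACC and AEB, rest on simple distance and speed relationships. The sketch below is not any manufacturer's implementation: it assumes a constant time-gap policy for ACC and a time-to-collision threshold for AEB. The gains, limits, and thresholds are illustrative values chosen for the example.

```python
def acc_acceleration(ego_speed, lead_speed, gap_m,
                     time_gap_s=2.0, standstill_m=5.0,
                     k_gap=0.2, k_speed=0.5, limits=(-3.0, 2.0)):
    """Commanded acceleration (m/s^2) under a constant time-gap policy.

    The desired gap grows with speed; the controller corrects both the
    gap error and the speed difference, then clamps to comfort limits.
    """
    desired_gap = standstill_m + time_gap_s * ego_speed
    accel = k_gap * (gap_m - desired_gap) + k_speed * (lead_speed - ego_speed)
    return max(limits[0], min(limits[1], accel))

def time_to_collision(gap_m, closing_speed):
    """Seconds until impact at the current closing speed (inf if not closing)."""
    if closing_speed <= 0:
        return float("inf")
    return gap_m / closing_speed

def aeb_should_brake(gap_m, ego_speed, lead_speed, ttc_threshold_s=1.5):
    """Trigger emergency braking when time-to-collision drops below the threshold."""
    return time_to_collision(gap_m, ego_speed - lead_speed) < ttc_threshold_s
```

With these assumed settings, a car traveling at 25 m/s behind a lead vehicle at the same speed holds steady at a 55 m gap, while a 10 m gap closing at 10 m/s triggers emergency braking.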

Car manufacturers integrating these autonomous and driver-assistance technologies include:

- Audi: Traffic Jam Assist manages steering, acceleration, and braking in heavy traffic.

- General Motors (Cadillac): Super Cruise offers hands-free highway driving.

- Genesis: Learns and replicates individual driver preferences in its autonomous driving features.

- Tesla: Autopilot includes LDW, lane-keep assist, ACC, park assist, Summon, and advanced self-driving capabilities.

- Volkswagen: IQ Drive with Travel Assist integrates lane-centering and ACC.

- Volvo: Pilot Assist provides semi-autonomous driving, lane-centering, and ACC.

Levels of Autonomy in Self-Driving Cars: A Spectrum of Automation

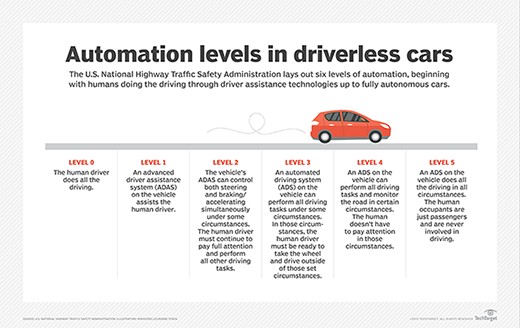

SAE International (formerly the Society of Automotive Engineers) defines six levels of driving automation, providing a clear framework for understanding autonomous capabilities:

- Level 0: No Driving Automation: The human driver controls all aspects of driving.

- Level 1: Driver Assistance: The vehicle assists with either steering or acceleration/braking, but not simultaneously. The driver must remain engaged and in control.

- Level 2: Partial Driving Automation: The vehicle can manage two or more automated driving functions concurrently, like steering and acceleration/braking. The driver must be vigilant and ready to intervene at any moment.

- Level 3: Conditional Driving Automation: The vehicle can manage all driving tasks under specific conditions, such as highway driving. Human intervention is still required when the system requests it.

- Level 4: High Driving Automation: The vehicle can operate autonomously in defined scenarios without driver input or intervention. Driver input is optional in these situations.

- Level 5: Full Driving Automation: The vehicle is capable of driving itself under all conditions without any human input.

The U.S. National Highway Traffic Safety Administration (NHTSA) uses a similar classification system for driving automation levels.
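The level definitions above translate naturally into a small lookup. The sketch below encodes the six levels as an enumeration and answers two questions drawn from the descriptions: whether the driver must supervise at all times, and whether the automation is limited to specific conditions. The class and function names are hypothetical and are not part of the SAE standard.

```python
from enum import IntEnum

class SAELevel(IntEnum):
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL = 2
    CONDITIONAL = 3
    HIGH = 4
    FULL = 5

def driver_must_supervise(level: SAELevel) -> bool:
    """Levels 0-2: the human must stay engaged and ready to intervene."""
    return level <= SAELevel.PARTIAL

def fallback(level: SAELevel) -> str:
    """Who takes over when the automation reaches its limits."""
    if level <= SAELevel.PARTIAL:
        return "human driver"
    if level == SAELevel.CONDITIONAL:
        return "human driver, when the system requests it"
    return "the vehicle itself"

def limited_to_conditions(level: SAELevel) -> bool:
    """Levels 3 and 4 drive themselves only in defined conditions or scenarios."""
    return level in (SAELevel.CONDITIONAL, SAELevel.HIGH)
```

Using `IntEnum` keeps the levels ordered, so comparisons such as `level <= SAELevel.PARTIAL` read the same way as the prose.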

The SAE levels of driving automation for autonomous vehicles, from 0 (no automation) to 5 (full automation).

Uses for Autonomous Vehicles: Beyond Personal Transportation

As of 2024, the most advanced autonomous vehicles in operation have reached Level 4 autonomy. However, making Level 4 and Level 5 vehicles fully available to the public in the U.S. requires overcoming technological hurdles and resolving regulatory and safety issues. Despite these limitations for personal use, Level 4 autonomy is finding applications in other sectors.

Waymo, for instance, has partnered with Lyft to offer Waymo One, a commercial autonomous ride-hailing service. Operating in Phoenix, San Francisco, Los Angeles, and Austin, Texas, the service lets users hail self-driving cars and provide feedback to Waymo. Even where a safety driver rides along as a precaution, these vehicles represent a significant step toward fully autonomous transportation.

China’s Hunan province is seeing the deployment of autonomous street-sweeping vehicles, demonstrating Level 4 capabilities by independently navigating familiar environments with limited novel situations.

While manufacturer projections vary, widespread availability of Level 4 and 5 vehicles remains on the horizon. A truly Level 5 car must handle unexpected driving scenarios as effectively as, or better than, a human driver. Currently, around 30 U.S. states have enacted legislation concerning self-driving vehicles, addressing aspects such as testing, deployment, liability, and regulation, though the specifics vary by state.

The Pros and Cons of Self-Driving Cars: Weighing the Future

Self-driving cars represent a complex convergence of technological advancements, offering both significant benefits and posing notable challenges.

Benefits of Autonomous Cars: Enhancing Safety and Efficiency

Safety is often cited as the primary advantage of autonomous vehicles. NHTSA, part of the U.S. Department of Transportation, estimated approximately 40,990 traffic fatalities for 2023 and reported 13,524 alcohol-impaired-driving deaths for 2022. Autonomous cars have the potential to eliminate crashes caused by human error, such as drunk or distracted driving. However, they are still susceptible to other accident-causing factors, including mechanical failures.

Ideally, a future dominated by autonomous vehicles could see smoother traffic flow and reduced congestion. Occupants of fully automated cars could use their commute time for productive tasks. Furthermore, autonomous vehicles could offer newfound independence to people who are unable to drive because of physical limitations, including access to jobs that currently require driving.

Autonomous trucks are being tested in “truck platooning” initiatives in the U.S. and Europe. Platooning lets trucks run on autopilot over long distances so that drivers can rest or perform other tasks, and it uses cooperative ACC and collision avoidance systems to enhance safety and improve fuel efficiency.

Disadvantages of Self-Driving Cars: Concerns and Challenges

Potential downsides include initial unease with driverless vehicles. As autonomy becomes more prevalent, over-reliance on autopilot technology could lead to driver complacency and reduced preparedness to intervene during software or mechanical failures.

Notably, a Forbes survey indicated that self-driving vehicles are currently involved in twice as many accidents per mile as conventional vehicles.

Incidents such as a 2022 video showing a Tesla crashing into a child test dummy during testing, along with numerous reports of crashes involving Tesla’s Full Self-Driving mode, highlight ongoing safety concerns. One 2023 incident involved a Tesla Model Y in Full Self-Driving mode hitting a student who was getting off a bus, resulting in serious injuries.

Further challenges include the substantial costs associated with producing and testing these advanced vehicles and the ethical considerations of programming autonomous vehicles to make split-second decisions in critical situations.

Weather conditions also pose a challenge, as sensors can be obscured by dirt or blocked by heavy rain, snow, or fog, impacting their ability to gather environmental data.

Self-Driving Car Safety and Challenges: Navigating the Road Ahead

Autonomous cars must be programmed to recognize a vast array of objects, from minor road debris to animals and pedestrians. Navigational challenges include GPS interference in tunnels, construction zones causing lane changes, and complex decision-making in situations like yielding to emergency vehicles.
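One common way to bridge a GPS outage such as a tunnel is dead reckoning: starting from the last good fix, the vehicle advances its estimated position using wheel odometry and heading changes. The sketch below shows only that idea, in a flat local x/y frame with hypothetical function names; real systems combine it with other sensors because odometry error accumulates over distance.

```python
import math

def dead_reckon(x, y, heading_rad, distance_m, heading_change_rad=0.0):
    """Advance a pose by one odometry step.

    The heading change is applied first, then the vehicle moves
    distance_m along the new heading. Returns (x, y, heading_rad).
    """
    heading = heading_rad + heading_change_rad
    return (x + distance_m * math.cos(heading),
            y + distance_m * math.sin(heading),
            heading)

def track_without_gps(last_fix, steps):
    """Estimate the pose after a series of (distance_m, heading_change_rad) steps.

    last_fix -- (x, y, heading_rad) from the last valid GPS reading
    """
    x, y, heading = last_fix
    for distance_m, heading_change in steps:
        x, y, heading = dead_reckon(x, y, heading, distance_m, heading_change)
    return x, y, heading
```

For example, driving 10 m straight ahead and then 10 m after a 90-degree left turn moves the estimate from the origin to roughly (10, 10).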

These systems must make instantaneous decisions about slowing down, swerving, or maintaining speed. Developers continue to address issues like hesitant or erratic behavior when objects are detected near roadways.
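One generic technique for reducing erratic reactions to flickering detections is to require several consecutive frames of agreement before changing state. The sketch below illustrates that idea only; it is an assumption for this example, not a description of how any particular developer addresses the problem, and the frame counts are arbitrary.

```python
def debounce(detections, confirm_frames=3, clear_frames=3):
    """Smooth a per-frame stream of True/False obstacle detections.

    The reported state flips to True only after confirm_frames consecutive
    detections, and back to False only after clear_frames consecutive
    misses. Returns the smoothed state for each frame.
    """
    state = False
    streak = 0  # consecutive frames that disagree with the current state
    smoothed = []
    for seen in detections:
        if seen != state:
            streak += 1
            needed = confirm_frames if seen else clear_frames
            if streak >= needed:
                state = seen
                streak = 0
        else:
            streak = 0
        smoothed.append(state)
    return smoothed
```

The trade-off is latency: a higher `confirm_frames` value suppresses more false alarms but delays the response to a real obstacle, so safety-critical paths such as emergency braking would need a much shorter confirmation window.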

A fatal accident in March 2018 involving an Uber autonomous vehicle highlighted these challenges. The vehicle’s software detected a pedestrian but dismissed the detection as a false positive and failed to take evasive action. The incident prompted Toyota to temporarily suspend public road testing and shift its focus to testing at its dedicated Michigan facility, established to further develop automated vehicle technology.

Accidents raise critical liability questions, which lawmakers are still working to resolve. Cybersecurity is another significant concern, with automotive companies actively working to mitigate hacking risks to autonomous vehicle software.

In the U.S., the Federal Motor Vehicle Safety Standards, issued and enforced by NHTSA, govern car manufacturing. China is taking a different approach, with government-led redesigns of urban infrastructure and policies to better accommodate self-driving cars. This includes regulations for human interaction with autonomous vehicles and the use of mobile network operators to enhance data processing for navigation, a strategy facilitated by China’s centralized governance.

History of Self-Driving Cars: From Prototypes to Modern Autonomy

The journey toward self-driving cars began with incremental automation features focused on safety and convenience before 2000, such as cruise control and antilock brakes. Post-millennium, advanced safety features like electronic stability control, blind-spot detection, and collision/lane departure warnings became common. Between 2010 and 2016, driver assistance technologies like rearview cameras, automatic emergency braking, and lane-centering assistance emerged.

Since 2016, self-driving technology has advanced toward partial autonomy, incorporating lane-keeping assistance, adaptive cruise control, and self-parking capabilities. Tesla’s 2019 Smart Summon feature allowed vehicles to navigate parking lots autonomously, and the Full Self-Driving feature, while still a Level 2 system requiring driver attentiveness, represents further progress.

Modern vehicles now commonly feature ACC, AEB, LDW, self-parking, hands-free steering, lane-centering, lane change assist, and highway driving assist. Fully automated vehicles are not yet publicly available and may still be years away. NHTSA provides federal guidelines for introducing new Automated Driving Systems (ADS) on public roads, with these guidelines evolving alongside technological advancements.

Nevada became the first jurisdiction to permit driverless car testing on public roads in June 2011, followed by California, Florida, Ohio, and Washington, D.C. Around 30 U.S. states now have self-driving vehicle legislation.

Historically, the concept dates much further back: around 1478, Leonardo da Vinci designed a prototype of a spring-powered, programmable, self-propelled robot car capable of following preset courses.
